In the world of machine learning, we often encounter complex problems, from image recognition to natural language processing. However, let’s take a step back and explore something more elementary yet equally intriguing: addition! Yes, you read that right, addition. In this blog post, we’ll build a neural network that learns to add two numbers.

A quick note before we begin: using machine learning to find the sum of 2 numbers is not a recommended approach. I tried this out of curiosity when I started learning machine learning, and I’m sharing it with you all to make learning fun.

Let’s brush up on the basics of deep learning before we jump into the exercise.


The Basics

Here are a few machine learning and deep learning terms that I’ll use in the exercise, each explained at a high level in a couple of sentences.

Neural Network

A computational model inspired by the structure and function of the human brain. It consists of interconnected nodes (neurons) organized in layers. Neural networks are trained on data to learn patterns and make predictions.

Activation Function

A function applied to the output of a neuron to introduce non-linearity. It allows neural networks to learn complex relationships in data. Common activation functions include ReLU (Rectified Linear Unit) and Sigmoid.
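A minimal NumPy sketch of the two activation functions named above. The function names `relu` and `sigmoid` are my own choices for illustration, not a library API.

```python
import numpy as np


def relu(x):
    """ReLU: keeps positive values and replaces negative values with 0."""
    return np.maximum(0.0, x)


def sigmoid(x):
    """Sigmoid: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


z = np.array([-2.0, 0.0, 3.0])
print(relu(z))       # [0. 0. 3.]
print(sigmoid(0.0))  # 0.5
```

Both are non-linear, which is what lets stacked layers model more than a straight line.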

Loss Function

A measure of how well a model’s predictions match the true target values. During training, the goal is to minimize the loss function, guiding the model to make better predictions.
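As a concrete example, here is a sketch of Mean Squared Error (MSE), the loss used later in this exercise: the average of the squared differences between the true values and the predictions. The helper name `mean_squared_error` is illustrative.

```python
import numpy as np


def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return np.mean((y_true - y_pred) ** 2)


# True sums vs. predictions that are each off by 0.1
print(mean_squared_error([3.0, 0.7], [2.9, 0.8]))  # roughly 0.01
```

Squaring makes every error positive and penalises large mistakes more than small ones.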

Gradient Descent

An optimization algorithm used to minimize the loss function. It adjusts the model’s parameters iteratively in the direction of steepest descent, guided by the gradients of the loss function with respect to the parameters.
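To make this concrete, the sketch below runs plain gradient descent on MSE for a simple linear model `y = w1*x1 + w2*x2 + b` (a deliberately simpler model than the neural network built later, chosen so the gradients fit on two lines). The learning rate and step count are assumptions picked for this toy problem.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.random((1000, 2))      # pairs of numbers in [0, 1)
y = X[:, 0] + X[:, 1]          # target: their sum

w = np.zeros(2)                # one weight per input number
b = 0.0                        # bias
learning_rate = 0.5

for step in range(2000):
    y_pred = X @ w + b
    error = y_pred - y
    # Gradients of the MSE loss with respect to w and b
    grad_w = 2 * X.T @ error / len(X)
    grad_b = 2 * error.mean()
    # Move the parameters against the gradient (steepest descent)
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(w, b)  # w approaches [1, 1] and b approaches 0
```

Since addition is exactly `1*x1 + 1*x2 + 0`, gradient descent recovers those weights here.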

Backpropagation

A fundamental algorithm in training neural networks. It applies the chain rule layer by layer, from the output back to the input, to calculate the gradient of the loss function with respect to each model parameter. Gradient descent then uses these gradients to update the weights.
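Here is a hand-written sketch of backpropagation for a tiny 2 → 8 (ReLU) → 1 network with MSE loss, the same shape as the network used later. Keras does all of this automatically; the variable names and random initialisation are illustrative assumptions. The last lines check one analytic gradient against a finite-difference estimate.

```python
import numpy as np

rng = np.random.default_rng(42)

# A tiny batch of addition data: 4 pairs and their sums, shape (4, 1)
X = rng.random((4, 2))
y = (X[:, 0] + X[:, 1]).reshape(-1, 1)

# Randomly initialised parameters of a 2 -> 8 (ReLU) -> 1 network
W1 = rng.normal(size=(2, 8))
b1 = np.zeros(8)
W2 = rng.normal(size=(8, 1))
b2 = np.zeros(1)


def mse_loss(W1, b1, W2, b2):
    """Forward pass followed by the mean squared error."""
    hidden = np.maximum(0.0, X @ W1 + b1)
    y_pred = hidden @ W2 + b2
    return np.mean((y_pred - y) ** 2)


# Forward pass, keeping intermediate values for the backward pass
z1 = X @ W1 + b1
h = np.maximum(0.0, z1)
y_pred = h @ W2 + b2

# Backward pass: chain rule from the loss back to every parameter
d_pred = 2 * (y_pred - y) / y.size   # dLoss/dy_pred
dW2 = h.T @ d_pred
db2 = d_pred.sum(axis=0)
d_h = d_pred @ W2.T                  # gradient arriving at the hidden layer
d_z1 = d_h * (z1 > 0)                # ReLU passes gradient only where z1 > 0
dW1 = X.T @ d_z1
db1 = d_z1.sum(axis=0)

# Sanity check: compare one analytic gradient with a finite-difference estimate
eps = 1e-6
W1_up, W1_down = W1.copy(), W1.copy()
W1_up[0, 0] += eps
W1_down[0, 0] -= eps
numeric = (mse_loss(W1_up, b1, W2, b2) - mse_loss(W1_down, b1, W2, b2)) / (2 * eps)
print(dW1[0, 0], numeric)  # the two numbers should agree closely
```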

Batch Size

The number of training samples used in one forward/backward pass during training. Larger batch sizes can speed up training but require more memory.

Epoch

One complete iteration through the entire training dataset during training.
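To see how batch size and epochs relate, the sketch below mimics a mini-batch training loop with the numbers used later in this exercise (1000 samples, batch size 32, 100 epochs). The actual forward/backward computation is left out; only the batching is shown. Keras’s `model.fit` also reshuffles the training data each epoch by default, which is imitated here with `np.random.permutation`.

```python
import math

import numpy as np

num_samples = 1000
batch_size = 32
epochs = 100

X = np.random.rand(num_samples, 2)

updates = 0
for epoch in range(epochs):
    indices = np.random.permutation(num_samples)  # reshuffle every epoch
    for start in range(0, num_samples, batch_size):
        batch = X[indices[start:start + batch_size]]
        # (forward pass, backpropagation and one weight update would go here)
        updates += 1
last_batch_size = len(batch)

batches_per_epoch = math.ceil(num_samples / batch_size)
print(batches_per_epoch, updates, last_batch_size)  # 32 3200 8
```

So one epoch is 32 weight updates here, with a final, smaller batch of 8 samples.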

These are just a few of the many terms you’ll encounter in the vast field of machine and deep learning, but they are enough to follow the exercise below.

Prerequisites

Here’s a checklist for getting started. Having these items installed and ready is recommended, though not mandatory.

- Install Anaconda
  - Anaconda comes packaged with many popular machine learning libraries
- Create an environment in Anaconda
  - This is highly recommended: if something goes wrong, only that environment is affected, not the entire Anaconda installation
- Visual Studio Code IDE
- Install Keras
  - This requirement is specific to this exercise
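Once the environment is set up, a quick sanity check is to confirm that the libraries used in this exercise can be found from it. This is a sketch: `check_packages` is a hypothetical helper name, and it only checks that each package is importable, not its version. Run it in a notebook cell that uses your environment’s kernel.

```python
import importlib.util


def check_packages(names):
    """Return {package_name: True/False} depending on whether it can be imported."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


for name, installed in check_packages(["numpy", "keras"]).items():
    print(f"{name}: {'installed' if installed else 'missing'}")
```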

Do you have everything ready? Hope you’re excited. Let’s jump into the exercise.

Sum of 2 Numbers using Machine learning

Create a folder and a file

Create a new folder with any name. Navigate into the folder and create a file named addition.ipynb. Open the folder in Visual Studio Code IDE.

Open the addition.ipynb file and create a code cell for each of the following sections by clicking the “+ Code” button at the top left of the notebook in VS Code.

Import libraries

Import the NumPy and Keras libraries.

import numpy as np

from keras.models import Sequential

from keras.layers import Dense

Prepare the data

The accuracy of a machine learning model depends heavily on the data we train it with. To create addition data, let’s generate 1000 pairs of random numbers between 0 and 1 as our inputs. The output for each pair is the sum of its two numbers.

num_samples = 1000

X_train = np.random.rand(num_samples, 2)

y_train = X_train[:, 0] + X_train[:, 1]

Define the neural network

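Let’s build a neural network with an input layer that takes 2 numbers, 1 hidden layer with 8 neurons, and an output layer with a single neuron. The hidden layer uses the “relu” activation function; the output layer has no activation, so it can output any real number as the predicted sum.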
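To see what this architecture computes, here is a NumPy sketch of the forward pass of a 2 → 8 (ReLU) → 1 network with random, untrained weights. The weight names and initialisation are illustrative; Keras creates and manages its own weights. The sketch also counts trainable parameters: 2×8 + 8 = 24 in the hidden layer and 8×1 + 1 = 9 in the output layer, 33 in total, which is the figure `model.summary()` should report for the Keras model.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hidden layer: 2 inputs -> 8 neurons (like Dense(8, activation='relu'))
W1 = rng.normal(scale=0.5, size=(2, 8))
b1 = np.zeros(8)
# Output layer: 8 neurons -> 1 output, no activation (like Dense(1))
W2 = rng.normal(scale=0.5, size=(8, 1))
b2 = np.zeros(1)


def forward(x):
    hidden = np.maximum(0.0, x @ W1 + b1)  # ReLU
    return hidden @ W2 + b2                # linear output


x = np.array([[0.3, 0.4]])
print(forward(x).shape)  # (1, 1): one predicted sum per input pair

num_params = W1.size + b1.size + W2.size + b2.size
print(num_params)  # 33
```

Training is what turns these random weights into ones whose output approximates the sum.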

model = Sequential()

model.add(Dense(8, input_shape=(2,), activation='relu'))

model.add(Dense(1))

Compile the model

Compile the model with Mean Squared Error (MSE) as the loss function and the Adam optimizer.
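Adam is gradient descent with two additions: a running average of past gradients (momentum) and a per-parameter step size scaled by a running average of squared gradients. Below is a sketch of the textbook Adam update rule, with defaults set to the values Keras documents for its Adam optimizer (learning_rate=0.001, beta_1=0.9, beta_2=0.999, epsilon=1e-7); Keras’s own implementation may differ in small details. The function name `adam_minimize` and the toy loss (w - 3)² are illustrative assumptions.

```python
import numpy as np


def adam_minimize(grad_fn, w0, learning_rate=0.001, beta_1=0.9,
                  beta_2=0.999, epsilon=1e-7, steps=1000):
    """Textbook Adam: momentum (m) plus per-parameter step scaling (v)."""
    w = np.asarray(w0, dtype=float)
    m = np.zeros_like(w)  # running average of gradients
    v = np.zeros_like(w)  # running average of squared gradients
    for t in range(1, steps + 1):
        g = grad_fn(w)
        m = beta_1 * m + (1 - beta_1) * g
        v = beta_2 * v + (1 - beta_2) * g ** 2
        m_hat = m / (1 - beta_1 ** t)  # bias correction for the zero start
        v_hat = v / (1 - beta_2 ** t)
        w = w - learning_rate * m_hat / (np.sqrt(v_hat) + epsilon)
    return w


# Toy loss (w - 3)^2 with gradient 2 * (w - 3). A larger learning rate than
# the default is used so this small demo converges quickly.
w_final = adam_minimize(lambda w: 2 * (w - 3.0), w0=[0.0], learning_rate=0.1)
print(w_final)  # close to [3.]
```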

model.compile(loss='mse', optimizer='adam')

Train the model

Train the model for 100 epochs, with a batch size of 32.

batch_size = 32

epochs = 100

model.fit(X_train, y_train, batch_size=batch_size, epochs=epochs, verbose=1)

This may take a few seconds, depending on your CPU. It took around 10 to 15 seconds to complete on my laptop.

Test the model

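Now that the model is trained, let’s test it with a few custom inputs. I used 2 pairs, but you can test your model with as many as you like. Note that the training pairs were drawn from the range 0 to 1, so the pair (1, 2) asks the model to go beyond the range it saw during training.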

test_input = np.array([[1, 2], [0.3, 0.4]])

predicted_sum = model.predict(test_input)

Print the values

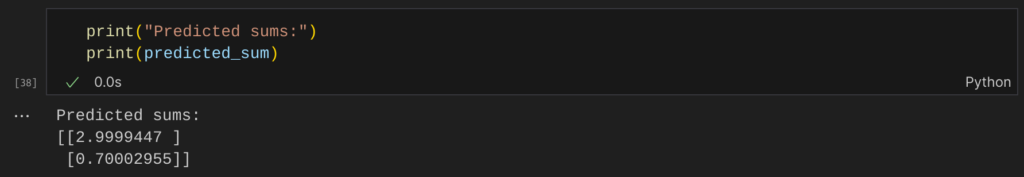

The prediction is complete. Let’s see if they’re right by printing the predicted values.

print("Predicted sums:")

print(predicted_sum)

Pretty close, isn’t it? For reference, the exact sums are 3.0 and 0.7.
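To put a number on “close”, a small helper can compare each prediction with the exact sum. This is a sketch: `report_errors` is a hypothetical name, and it assumes `predicted_sum` has the `(n, 1)` shape that `model.predict` returns for this model. The error for (1, 2) may be larger, since `np.random.rand` only produced training inputs in [0, 1).

```python
import numpy as np


def report_errors(test_input, predicted_sum):
    """Print each pair, its exact sum, the prediction and the absolute error."""
    exact = test_input.sum(axis=1)
    predicted = np.asarray(predicted_sum).reshape(-1)  # (n, 1) -> (n,)
    errors = np.abs(predicted - exact)
    for pair, e, p, err in zip(test_input, exact, predicted, errors):
        print(f"{pair} -> exact {e:.3f}, predicted {p:.3f}, error {err:.3f}")
    return errors


# Usage in the notebook, after the prediction cell:
# report_errors(test_input, predicted_sum)
```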

Conclusion

In this blog, we built a neural network that learns to add two numbers.

However, as I mentioned at the beginning, using machine learning to find the sum of 2 numbers is not a recommended approach: ordinary arithmetic gives the exact sum instantly, while the network only approximates it.

If you’re curious, try building a neural network that performs subtraction. All the best!
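As a starting point, one possible change is only in the data: make the target the difference of each pair instead of the sum. This is a sketch of that one step; the rest of the notebook can stay the same, since the output layer has no activation and can therefore produce negative values.

```python
import numpy as np

num_samples = 1000
X_train = np.random.rand(num_samples, 2)
# Only the target changes: the difference of each pair instead of the sum.
y_train = X_train[:, 0] - X_train[:, 1]

print(y_train.min(), y_train.max())  # both within (-1, 1)
```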

Hope you enjoyed reading this article.